AI tools for TLDR for video

Related Tools:

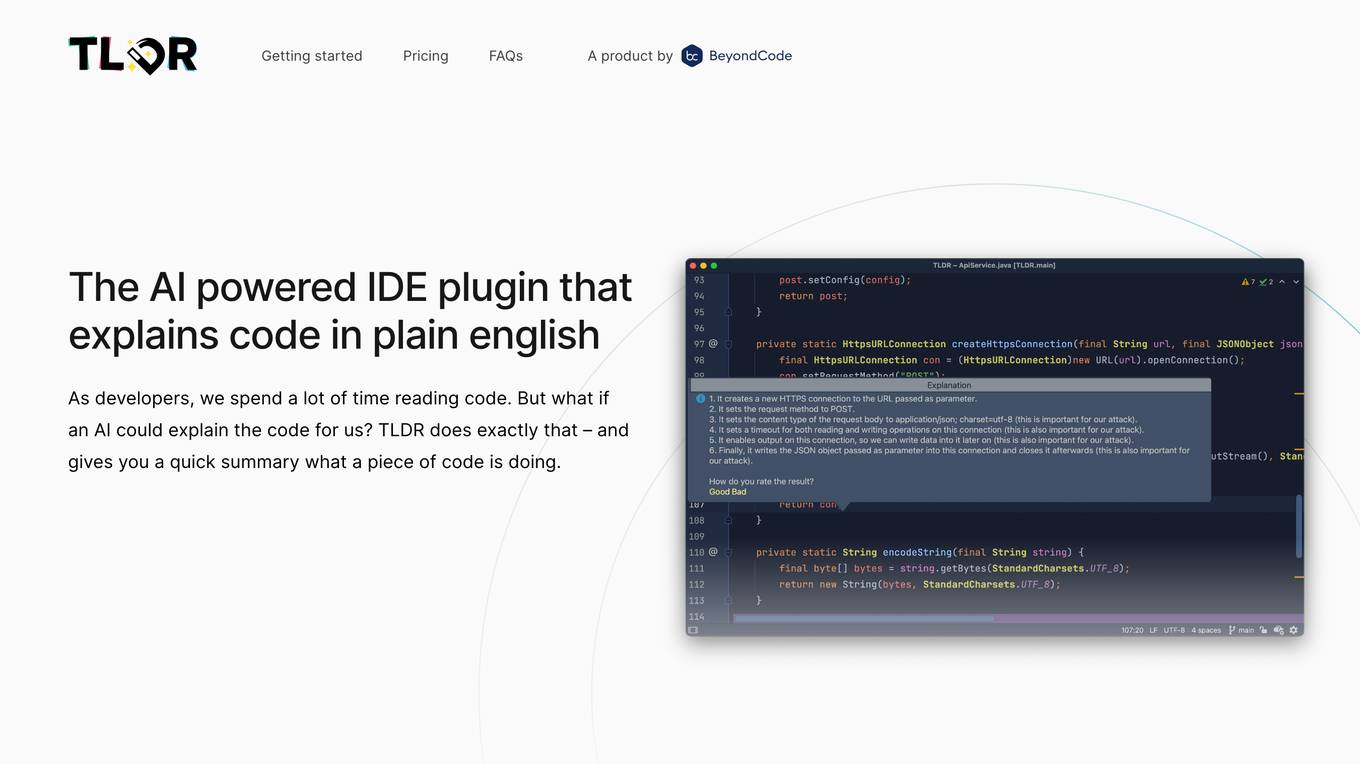

TLDR

TLDR is an AI-powered IDE plugin that explains code in plain English by giving developers a quick summary of what a piece of code does. It supports almost all programming languages and offers a free version that users can try before purchasing. The aim is to make complex code structures easier to understand and to cut the time developers spend getting to grips with a codebase.

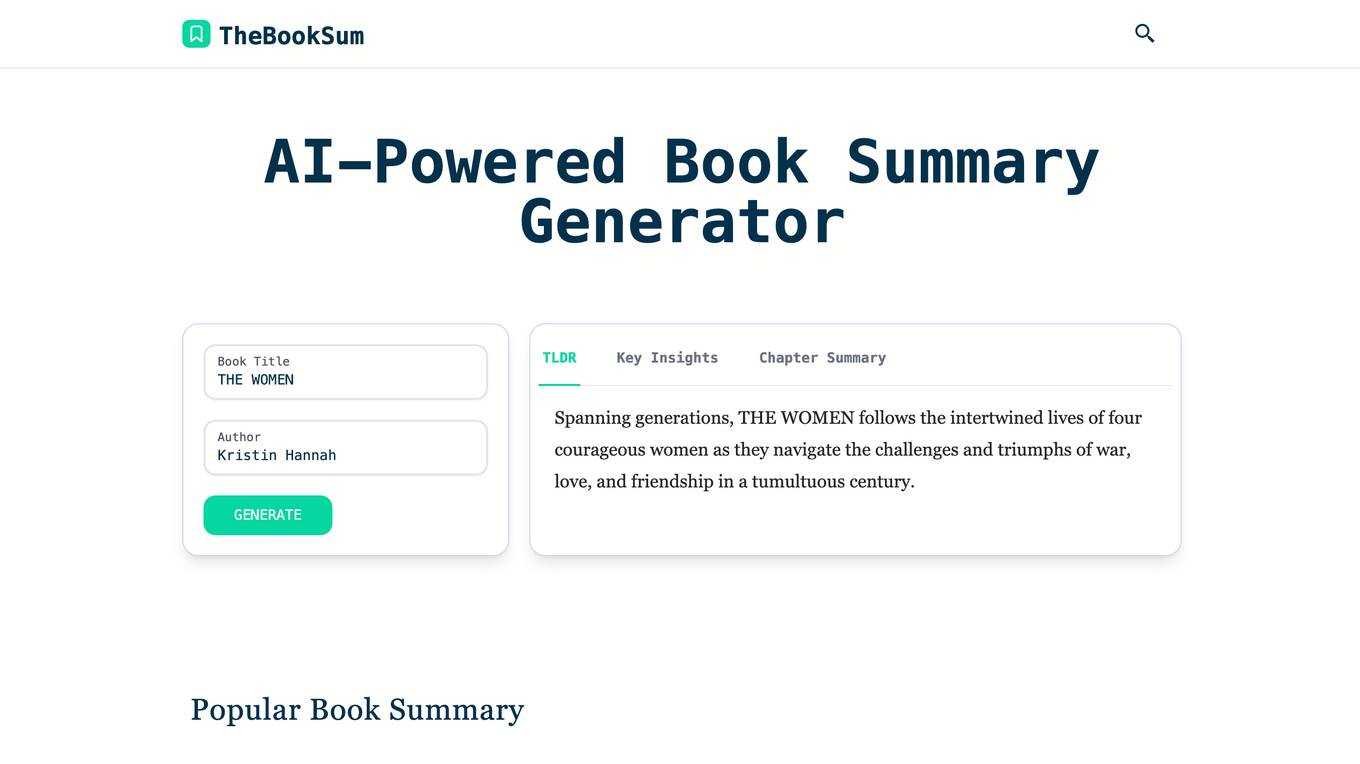

TheBookSum

TheBookSum is an AI-powered tool that generates instant book summaries, helping users quickly grasp the core of a book in any genre. Users enter the title and author of the book they want summarized, and TheBookSum's AI generates a detailed summary that captures its essence. Each summary is divided into clear segments and extracts and explains the book's main concepts and keywords, which helps readers grasp its central themes and terms. TheBookSum is free to use and covers a broad range of books, from fiction and non-fiction to technical guides.

ReaderMax

ReaderMax is a Chrome extension that uses AI to summarize news articles and other text-based content. It helps users save time and improve their reading comprehension by providing concise, easy-to-understand summaries of long, complex articles. It can also be used as a side panel in the Chrome browser.

Ask Occam

Ask Occam is uniquely concise and decisive: it transforms complex problems into simple, clear answers. It is ideal for informing quick decisions and for rapid top-down learning of new subjects. You can also type "@Ask Occam" in an existing chat to obtain a TLDR summary or a definitive conclusion.